Featured Faculty

Assistant Professor of Management and Organizations; Assistant Professor in the Department of Computer Science (CS), McCormick School of Engineering (Courtesy)

Yifan Wu

As artificial intelligence (AI) makes it increasingly simple to generate realistic-looking images, even casual internet users should be aware that the images they are viewing may not reflect reality. But that doesn’t mean we humans are doomed to be fooled.

The process by which diffusion models (AI systems that transform text into images) learn to create images tends to generate what Matt Groh calls “artifacts and implausibilities”: instances in which the model fails to grasp the underlying reality of a photographic scene.

“These models learn to reverse noise in images and generate pixel patterns in images that match text descriptions,” says Groh, an assistant professor of management and organizations at Kellogg. “But these models are never trained to learn concepts like spelling or the laws of physics or human anatomy or simple functional interactions between buttons on your shirt and straps on your backpack.”

Groh, along with colleagues Negar Kamali, Karyn Nakamura, Angelos Chatzimparmpas, and Jessica Hullman, recently used three text-to-image AI systems (Midjourney, Stable Diffusion, and Firefly) to generate a curated suite of images that illustrate some of the most common artifacts and implausibilities.

“One of the most surprising aspects of curating images was realizing that AI models aren’t always improving in a linear fashion,” Kamali says. “There were instances where the quality, particularly for celebrity images, actually declined. It was a reminder that progress in AI isn’t always straightforward.”

Drawing from this work, Groh and his colleagues share five takeaways (and several examples) that you can use to flag suspect images.

As you peruse an image you think may be artificially generated, taking a quick inventory of a subject’s body parts is an easy first step. AI models often create bodies that can appear uncommon—and even fantastical. Are there missing or extra limbs or digits? Bodies that merge into their surroundings or into other nearby bodies? A giraffe-like neck on a human? In AI-generated images, teeth can overlap or appear asymmetrical. Eyes may be overly shiny, blurry, or hollow-looking.

“If you’re really like, ‘Ah, this is a person that looks a little off,’ check if that pupil is circular,” Groh suggests.

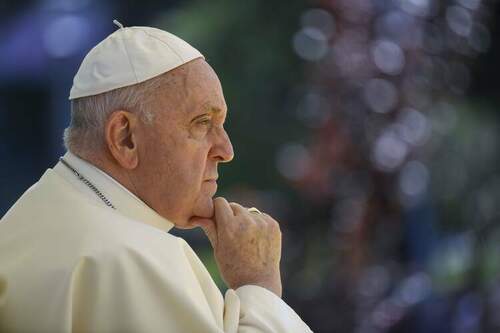

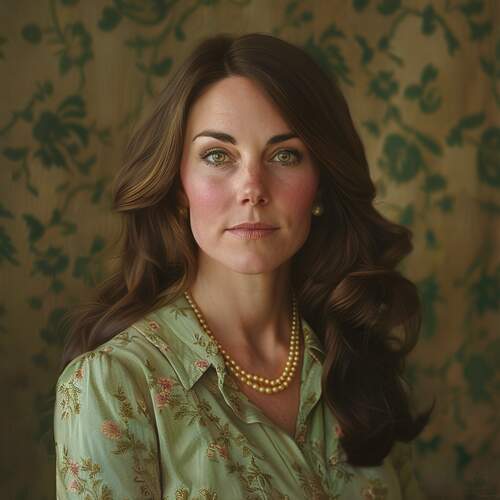

If the photo is of a public figure, you can compare it with existing photos from trusted sources. For example, deepfaked images of Pope Francis or Kate Middleton can be compared with official portraits to identify discrepancies in, say, the Pope’s ears or Middleton’s nose.

“Face portraits are particularly challenging to distinguish because they often lack the contextual clues that more-complex scenes provide,” Kamali says.

And while AI models are generally good at creating realistic-looking faces, they are less adept at hands. Hands may lack fingernails, merge together, or feature odd proportions. That said, Groh notes, human bodies are wildly diverse. An extra finger or a missing limb does not automatically mean an image is fake.

“There are false positives that happen this way,” Groh cautions. “Sometimes people have six fingers on one hand.”

Another clue that an image is AI-generated is that it simply doesn’t look like a normal photograph of a person. The skin might be waxy and shiny, the color oversaturated, or the face just a little too gorgeous. Groh suggests asking, “Does this have that sheen? Does this just look oversaturated in some way? Does it look like what I normally see on Instagram?”

While it might not be immediately obvious, he adds, looking at a number of AI-generated images in a row will give you a better sense of these stylistic artifacts.

“You’ll start to see that there’s a pattern after just seeing ten of these,” he says. “It’s these things that just look a little Photoshopped, just look a little bit extra. The overperfection.”

This is in part because the computer models are trained on photos of, well, models—people whose job it is to be photographed looking their best and to have their image reproduced.

“They’re the people who are paid to have pictures of them,” Groh says. “Regular-looking people just don’t have as many images of themselves on the internet.”

Other telltale stylistic artifacts are a mismatch between the lighting of the face and the lighting in the background, glitches that create smudgy-looking patches, or a background that seems patched together from different scenes. Overly cinematic-looking backgrounds, windswept hair, and hyperrealistic detail can also be signs, although many real photographs are edited or staged to the same effect. So while these are important clues, they’re not definitive.

“It’s not to say that for sure we know it’s fake,” Groh says. “It’s just a reminder to let your spidey senses tingle.”

And when image creators deliberately steer clear of the telltale style of AI-generated images, telling real images from fake ones becomes more of a guessing game.

“It was surprising to see how images would slip through people’s AI radars when we crafted images that reduced the overly cinematic style that we commonly attribute to AI-generated images,” Nakamura says.

Because these text-to-image AI models don’t actually know how things work in the real world, objects (and how a person interacts with them) can offer another chance to sniff out a fake.

A college-logo sweatshirt might have the institution’s name misspelled—or be written in an unconventional font. A diner could be sitting at a table with their hand inside of a hamburger. A tennis racquet’s strings hang slack. A normally floppy slice of pizza sticks straight out.

“More-complex scenes introduce more opportunities for artifacts and implausibilities—and offer additional context that can aid in detection,” Kamali says. “Group photos, for instance, tend to reveal more inconsistencies.”

Zoom in on details of objects, such as buttons, watches, or buckles. Groh says funky backpacks are a classic artifact.

“When there’s interactions between people and objects, there are often things that don’t look quite right,” Groh says. “Sometimes a backpack strap just looks like it merges into your shirt. I’ve never seen a backpack strap that does that. Also, I think your backpack would fall off your back.”

Along similar lines, shadows, reflections, and perspective can also offer clues to an image’s origins. For instance, images created by AI often feature warped depth as well as perspective mistakes. Think: a staircase that appears to go both up and down, or one that leads nowhere.

“When it comes to more-complex scenes, it becomes obvious that diffusion models suffer from a lack of understanding of logic,” says Nakamura, “including the logic of the human body and the logics of light, shadows, and gravity.”

In AI-generated photos, shadows often defy the laws of physics. For example, they might fall at different angles from their sources, as if the sun were shining from multiple positions. A mirror may reflect back a different image, such as a man in a short-sleeved shirt who wears a long-sleeved shirt in his reflection.

“Although it would be pretty cool, you can’t look in the mirror and have a different shirt in the mirror than the one you’re wearing on your body,” Groh says.

Here’s another thing AI models don’t have much of: context.

If everything you know about Taylor Swift suggests she would not endorse Donald Trump for president, then you probably weren’t persuaded by a recent AI-generated image of Swift dressed as Uncle Sam and encouraging voters to support Trump.

“When you’re attuned to sociocultural implausibilities, you can sense immediately when something in an image feels off,” Kamali says. “This feeling often prompts a closer examination, leading you to notice other categories of artifacts and implausibilities.”

Lacking cultural sensitivity and historical context, AI models are prone to generating jarring scenes that are unlikely to occur in real life. One subtle example is an image of two Japanese men embracing one another in an office.

“Karyn Nakamura is Japanese, and she mentioned that in Japan, it’s very uncommon for men to hug, especially in business settings,” Groh says. “It doesn’t mean it can’t happen, but it’s not a standard thing.”

Of course, it’s impossible for one person to have cultural sensitivity towards all potential cultures or be cognizant of a vast range of historical details, but some things will be obvious red flags. You do not have to be deeply versed in civil rights history to conclude that a photo of Martin Luther King, Jr. holding an iPhone is fake.

“We as humans do operate on context,” Groh says. “We think about different cultures and what would be appropriate and what is a little bit like, ‘If that really happened, I probably would have heard about this.’”

Editor’s note: Want more tips and examples? Groh and team have put together a handy report that is worth perusing.

Anna Louie Sussman is a writer based in New York.