Michael Meier

Artificial intelligence is poised to be the next disruptive work technology. But as it rapidly spreads across industries and occupations, it’s hard to separate the hype and cynicism from the reality of how it will impact the workplace.

Some observers foresee the technology obliterating careers, leading to mass layoffs and unemployment. Advocates, on the other hand, predict AI will usher in a golden age of labor, freeing up time for workers to spend on more complex and fulfilling pursuits.

The real answer will not be found in headlines, but in research, says Hatim A. Rahman, an associate professor of management and organizations at Kellogg. “We need to understand what trends we are recreating and what trends we want to change.”

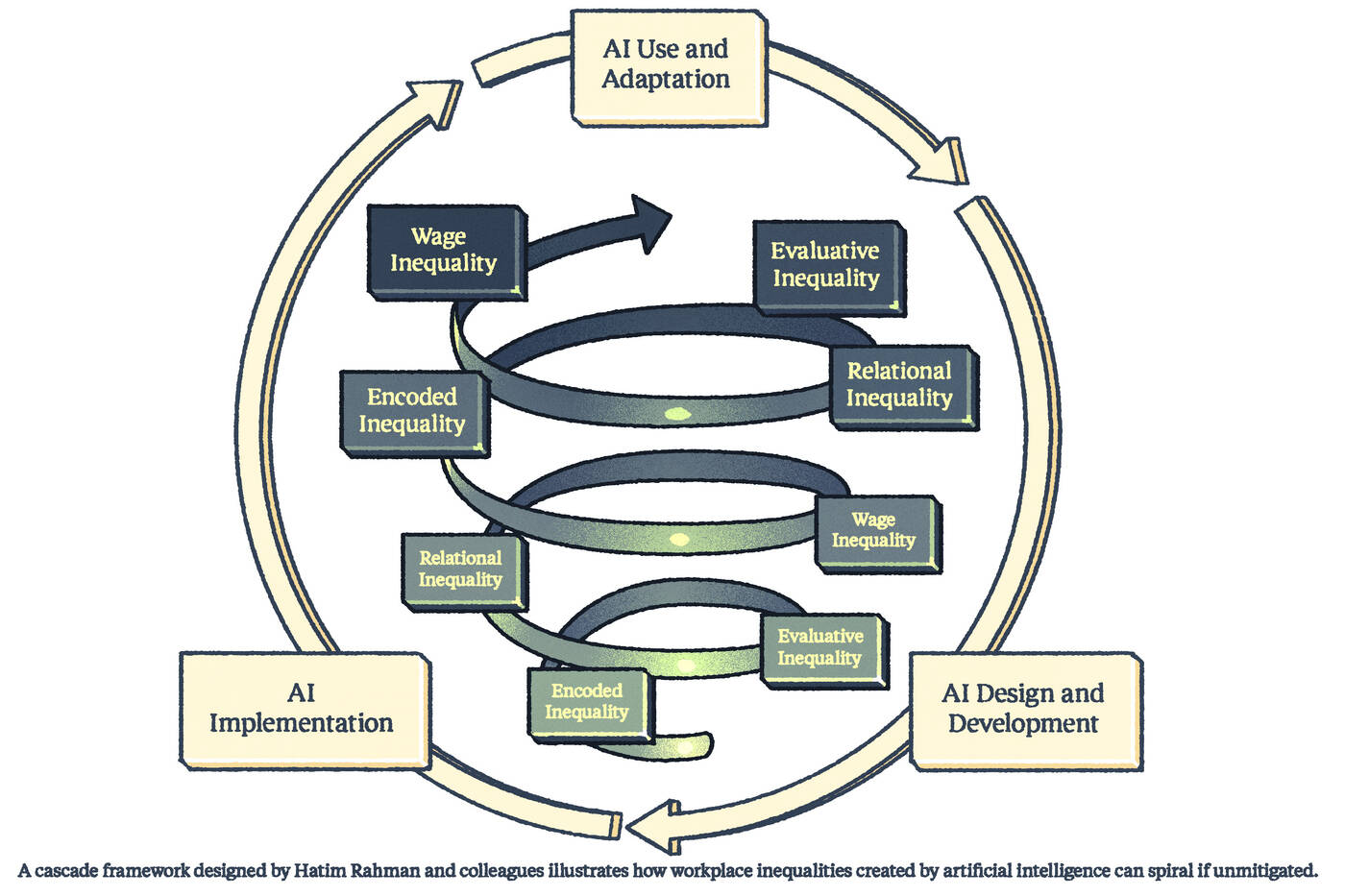

In a new review paper, Rahman and colleagues analyzed over 300 journal articles to grapple with the unwieldy but urgent subject of AI and workplace inequality. By connecting decades of work across disciplines, they created a framework for today’s researchers and scholars to better understand how AI triggers and amplifies inequality in the workplace, much like a cascade.

The metaphor reflects how seemingly small decisions at different stages in the AI lifecycle can rapidly snowball. Choices made during model design, implementation, or use and adaptation can ripple out to exacerbate or reduce workplace inequality.

By using this framework, researchers can identify the critical points where it would be most effective to intervene and minimize AI harm or amplify its benefits, the authors write.

“The effects of AI are going to play out over several years, if not decades, because it’s complicated and it’s going to vary quite a bit,” Rahman says. “We thought it was really important to be able to link the way that the technology is developed and implemented to these different forms of inequality. That way, we can better understand how to mitigate, redress, and ensure that we don’t recreate recent trends of increasing wage inequality correlated with the spread of new technology such as computers and digitization.”

Rahman and coauthors Arvind Karunakaran and Devesh Narayanan of Stanford University and Sarah Lebovitz of the University of Virginia surveyed the past literature on how AI and other technologies affect workplace inequality. They found that the topic has attracted researchers from a multitude of disciplines. Once a domain dominated by computer science, AI research now draws experts from economics, psychology, sociology, organization and management studies, philosophy, law, and policy. The authors organized this wide range of views into four distinct perspectives, each focused on different consequences of introducing AI into a workplace.

While these perspectives examine inequality using different expertise, their conclusions often overlap, the authors found. Establishing that common ground can prevent historical mistakes from being repeated.

“For such an important topic, we can’t afford to be so siloed,” Rahman says. “In the past, economists might not have talked to sociologists and vice versa, and they sometimes uncovered the same insights using different methods and terms. So one purpose of this review is to try to genuinely encourage interdisciplinary discussion and bridging of insights related to AI and the workplace that we can all benefit from.”

Instead of treating these four types of inequality as separate potential outcomes of AI, Rahman and colleagues propose linking them sequentially into the metaphor of a cascade. This framework reflects that different points along the AI lifecycle can trigger a series of events that can spiral out of control if left unchecked.

For example, decisions made during the design of an AI model may have unanticipated downstream consequences. A system for screening job candidates trained on resumes from a nondiverse population may lead to discriminatory hiring practices. An evaluation system built to assess the job performance of white-collar workers may score blue-collar workers more harshly, creating wider pay gaps.

Instead of looking at just what an AI model does, Rahman says “we should be asking, ‘Who designed it? What were their values and priorities when designing the model?’ The design and development, at least in my field, is the one area that is most overlooked and very rarely addressed.”

Implementation is another area where the seeds of workplace inequality can be planted. A failure to involve important stakeholders in this process can reduce trust in AI or amplify structural or relational imbalances. Rahman references the ongoing backlash against ChatGPT from artists and teachers who felt that their voices weren’t heard in the technology’s early stages.

“There’s nothing a priori that suggests that ChatGPT and generative AI had to be rolled out in this way,” Rahman says. “Why is it that copyright law wasn’t adhered to? Why weren’t educators more involved before schools got an institutional license [for AI]?”

As a result, many artists and educators perceive AI as a tool for replacing them, instead of a tool they can use to improve their work. This skepticism can deepen inequalities between management and labor, high-wage and low-wage workers, and other groups in the workplace.

“Ultimately, a lot of the decisions of how a technology or AI is going to be used are not tied to the capabilities of the technology,” Rahman says. “[They are] tied a lot more to ideologies, the value that we place on work, and who’s in the room when these decisions are being made.”

Amidst the AI boom, there are countless questions about workplace inequality that researchers can choose to pursue. What kinds of jobs are most affected when the technology is implemented? Does it offer lower-ranking workers the opportunity to “upskill” their jobs, taking on more-complex tasks? What factors inspire workers to experiment with AI instead of reacting with fear and apprehension? What new jobs does it create?

The concept of the AI inequality cascade can help direct attention to the most impactful questions, Rahman says. By focusing on the places in the AI lifecycle where inequalities arise, researchers can help workers and policymakers understand and prevent those consequences.

But to make the most difference, he suggests that they look upstream, at the early design and implementation steps. That’s easier said than done when commercial technologies are built on closely guarded secrets and randomized controlled experiments are impractical.

“There is so much more apparatus for studying downstream effects,” Rahman says. “But inequality is not a simple thing to measure or to tease out, so we need sophisticated theorizing and data collection around it.”

The inequality cascade can also be slowed or minimized by surrounding it with a circle: a feedback loop from the later stages back to design. Applying each of the four perspectives to research on the implementation and adaptation phases can help comprehensively identify how and why inequalities emerge. Experts can then use those insights to inform the design of future AI systems, preventing the same consequences from recurring and spiraling.

And while it seems like AI is moving into the workplace with a speed that doesn’t allow for careful consideration, Rahman says there’s still time for researchers and business leaders to get it right.

“Some of the recent estimates suggest very modest gains in the next 10 years related to AI,” Rahman says. “I think that indicates lots of opportunities and challenges for researchers to really understand and tease out the relationship and the effect AI is having in the workplace.”

Rob Mitchum is the editor in chief at Kellogg Insight.

Karunakaran, Arvind, Sarah Lebovitz, Devesh Narayanan, and Hatim A. Rahman. 2025. “Artificial Intelligence at Work: An Integrative Perspective on the Impact of AI on Workplace Inequality.” Academy of Management Annals.