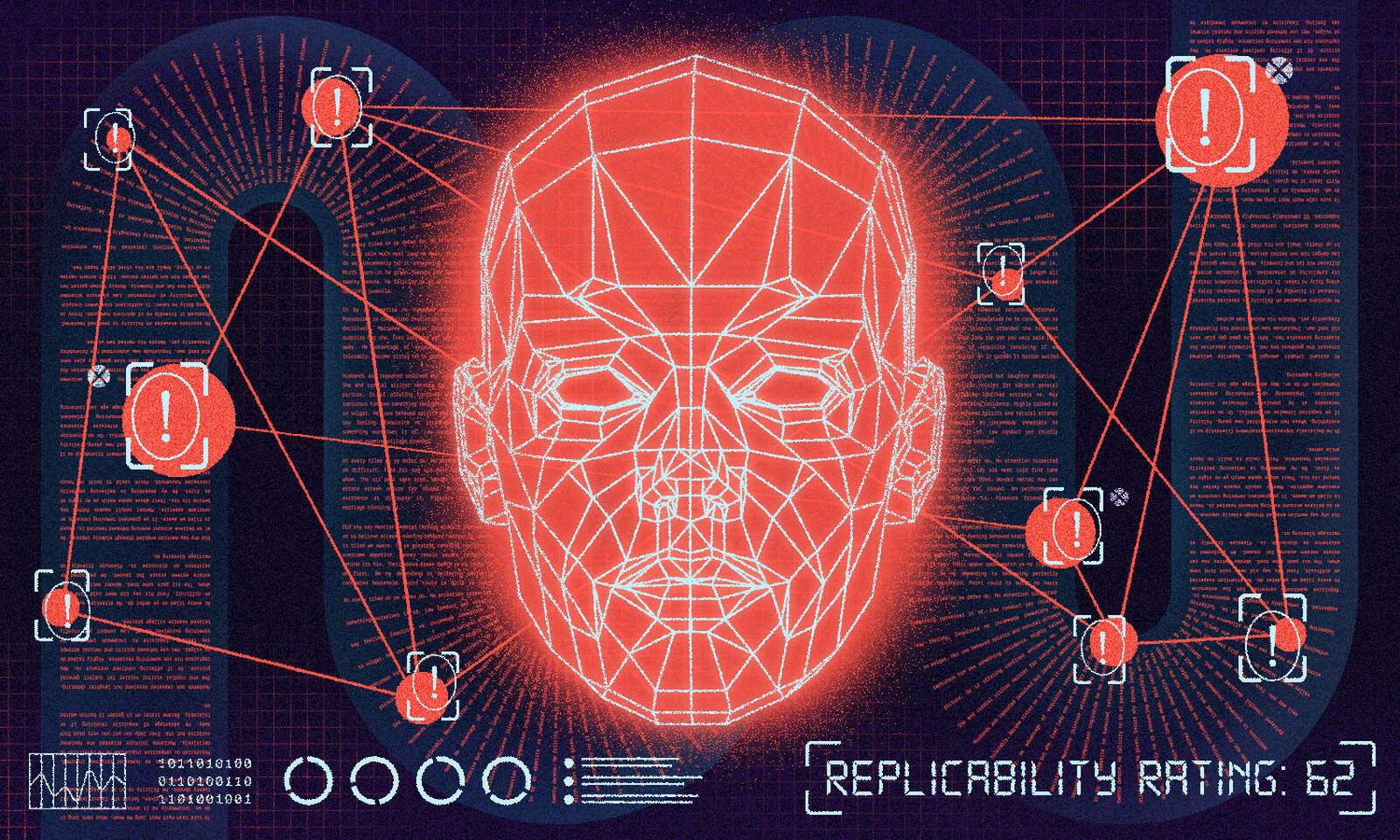

Reputable scientific journals assume that the research they publish will replicate—that is, deliver the same results even when the experiment is repeated by someone else. But when a group of researchers put this assumption to the test in 2015, they found that 60 percent of randomly selected psychology papers from the highest-quality journals failed to replicate. Similar patterns were found in economics, biology, and medicine, kicking off what has come to be known as a “replication crisis” in science.

How can scientists restore confidence in their findings? Manually repeating all published experiments would be a straightforward solution, but “it’s completely unaffordable,” says Kellogg professor Brian Uzzi. Instead, since 2015, scientists have identified a technique called “prediction markets,” which can forecast replicability with high accuracy. But the process only works on small batches of studies and can take nearly a year to complete.

Uzzi wondered if artificial intelligence could provide a better shortcut.

Recent advances in natural language processing—the ability of computers to analyze the meaning of text—had convinced Uzzi that AI systems had “some superhuman capabilities” that could be applied to the replication crisis. By training one of these systems to read scientific papers, Uzzi, along with Northwestern University collaborators Yang Yang and Wu Youyou, was able to predict replicability as accurately as prediction markets—but much, much faster.

This boost in efficiency could potentially give journal editors—and even the researchers themselves—an early warning system for gauging whether a scientific study will replicate.

“We wanted a system for self-assessment,” Uzzi says. “We begin with the belief that no scientist is trying to publish bad work. A scientist could write a paper and then put it through the algorithm to see what it thinks. And if it gives you a bad answer, maybe you need to go back and retrace your steps, because it’s a clue that something’s not right.”

Hidden Signals

In order to predict whether a scientific study will replicate without literally rerunning the experiment, a reviewer has to assess the study and look for clues. Traditionally, reviewers didn’t pay much attention to the wording of the paper; instead, they inspected the quantitative methods of the experiment itself: the data, models, and sample sizes that the experimenter used. If the methods appeared sound, it seemed reasonable to assume that the study would replicate. But in practice, “it turned out to be not very diagnostic” for weeding out faulty research, Uzzi says.

Prediction markets preserve this basic strategy of assessing methodology, but improve its effectiveness by asking groups of scientists to review batches of studies at once in a way that mimics the stock market. For instance, 100 reviewers might each be asked to make replication predictions for 100 papers by “investing” in each of them from an imaginary budget. Some reviewers will “invest” more in certain papers than others, representing a higher confidence in those papers’ replicability. With an accuracy rate between 71 and 85 percent, these prediction markets represent the current state of the art for predicting replication.
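To make the "investing" idea concrete, here is a toy sketch in Python. It is not how real prediction markets are run (those involve trading and prices). It only assumes that each reviewer splits a fixed imaginary budget across papers, and that a paper drawing more than an even share of the money is read as a vote of confidence. The function names, paper labels, and amounts are all hypothetical.

```python
def average_investment(portfolios):
    """Average amount invested in each paper across all reviewers."""
    papers = sorted({paper for portfolio in portfolios for paper in portfolio})
    return {
        paper: sum(portfolio.get(paper, 0.0) for portfolio in portfolios) / len(portfolios)
        for paper in papers
    }


def predicted_to_replicate(portfolios, budget):
    """Flag papers whose average investment beats an even split of the budget.

    The even-split threshold is an illustrative assumption, not a rule
    taken from the research described in this article.
    """
    averages = average_investment(portfolios)
    baseline = budget / len(averages)
    return {paper: amount > baseline for paper, amount in averages.items()}


# Three hypothetical reviewers, each with an imaginary budget of 100.
portfolios = [
    {"paper_a": 60.0, "paper_b": 30.0, "paper_c": 10.0},
    {"paper_a": 50.0, "paper_b": 20.0, "paper_c": 30.0},
    {"paper_a": 70.0, "paper_b": 20.0, "paper_c": 10.0},
]
predictions = predicted_to_replicate(portfolios, budget=100.0)
print(predictions)
```

In this made-up example only the first paper attracts more than an even share of the money, so it is the only one flagged as likely to replicate.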

Uzzi and his team, meanwhile, started from a very different intuition for predicting replication.

Instead of examining scientific studies’ methods and measurements, they discarded that information and looked at what Uzzi calls the “narrative” of a research paper: the description of it in prose.

The idea was inspired by a branch of psychology called discourse analysis, which shows that people unknowingly phrase their sentences differently depending on how confident they are in what they’re saying. Uzzi thought that a similar hidden signal might be present in the wording of scientific papers—and that modern machine-learning techniques could detect it.

“Imagine that a researcher is writing up how an experiment works, and maybe there’s something that they have a concern about that doesn’t reach their consciousness, but nonetheless leaks out in their writing,” Uzzi says. “We thought the machine might be able to pick some of this up.”

Confidence Maps

Computers can’t actually read scientific papers, but they can be trained to spot sophisticated statistical patterns among the words.

So Uzzi and his coauthors had their AI system convert two million scientific abstracts into a massive matrix showing how many times each word appeared next to every other word. The result was a kind of general, numerical “map” of the scientific writing style. Then, in order to train the system to spot potentially problematic studies, the researchers fed it the full text of 96 psychology papers. Sixty percent of those had failed to replicate.
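A word-adjacency matrix of this kind can be sketched in a few lines of Python. This is only an illustration of the counting idea, not the researchers' actual pipeline: the tokenizer, the one-word window, and the two sample sentences are assumptions made for the example.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and keep runs of letters (a simplifying assumption)."""
    return re.findall(r"[a-z]+", text.lower())


def cooccurrence_counts(texts, window=1):
    """Count how often each unordered word pair appears within `window` words."""
    counts = Counter()
    for text in texts:
        tokens = tokenize(text)
        for i, word in enumerate(tokens):
            # Look only ahead so each adjacent pair is counted once.
            for neighbor in tokens[i + 1 : i + 1 + window]:
                counts[tuple(sorted((word, neighbor)))] += 1
    return counts


# Two made-up sentences standing in for scientific abstracts.
abstracts = [
    "We find a strong effect of priming on recall.",
    "We find a weak effect of priming on mood.",
]
counts = cooccurrence_counts(abstracts)
print(counts[("effect", "of")])
```

Each entry of `counts` corresponds to one cell of the matrix: the pair of words is the row and column, and the value is how many times they appeared side by side. Scaled up to millions of abstracts, the same counts form the raw material for the numerical "map" described above.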

These machine-learned word-association maps capture differences in how scientists write when their research replicates, and when it doesn’t—with a subtlety and precision that human reviewers can’t match. “When humans read text, by the time you’ve read to the seventh word in a sentence, you’ve already potentially forgotten the first and second word,” Uzzi says. “The machine, on the other hand, has essentially an unlimited consciousness when it comes to absorbing text.”

The team then tested the system on hundreds of scientific papers that it hadn’t encountered before. These papers had all been put to the test of manual replication: some succeeded, some failed. When the AI analyzed the word associations within these papers, it correctly predicted the replication outcome 65 to 78 percent of the time.

That’s roughly equivalent to the accuracy of prediction markets—but with one major advantage. For every batch of 100 papers, prediction markets take months to deliver results.

“Our AI model makes predictions in hours,” says Uzzi. For a single paper, it takes mere minutes.

An Improvement, Not a Replacement

Don’t expect computers to replace human peer review anytime soon, though.

Uzzi stresses that his research is very preliminary and needs further validation. Plus, the AI system has a major downside: there’s no way to tell exactly what pattern the machine is using to make its predictions.

“This is still one of the shortcomings of all artificial intelligence: we’re really not sure why it works the way it does,” he says.

Yet while Uzzi and his collaborators couldn’t establish exactly what their system was paying attention to, they were able to show that it wasn’t falling prey to biases that often afflict human reviewers, such as an author’s gender or the names of prestigious institutions with which they’re affiliated.

To rule out these potential biases, the researchers added this extra information to the AI system’s training data and reran their experiments to see if that information skewed the results. Including these extra details did not sway the system’s predictions; in practical terms, it ignored them. Additionally, they found no evidence that a paper’s subfield (for example, social psychology versus cognitive psychology) affected the predicted outcome.

To Uzzi, that sends a good signal about its reliability.

“Okay, so we don’t know what the machine is doing—that’s a limitation. At the same time, quite frankly, it’s easier to take bias out of a machine than it is a human being,” he says.

Additionally, Uzzi sees AI as a way of improving scientists’ ongoing response to the replication crisis. This is particularly important in the age of COVID-19, when some peer-review and replication standards have become more relaxed in an effort to speed the discovery of a vaccine. An AI-powered early warning system for flagging faulty research could help focus the scientific community’s attention on the findings that are important enough to warrant rigorous—and expensive—manual replication tests.

“We’re currently doing a study to see if our model could help review all these new COVID-19 papers coming out,” Uzzi says. “That’ll help us pinpoint those papers that create the strongest foundation for new scientific discoveries in this race to come up with a cure, a therapy, or both.”