Featured Faculty

Cynthia Wang, Clinical Professor of Management & Organizations; Executive Director of Kellogg's Dispute Resolution and Research Center

Yevgenia Nayberg

It was caused by 5G technology. Bill Gates invented it. No, the Chinese government did, or maybe a pharmaceutical company. Actually, it’s all a hoax—a mass delusion.

These are just some of the conspiracy theories that began to circulate in the early months of the COVID-19 pandemic. For example, by June of 2020, a Pew Research Center survey showed that 71 percent of Americans had heard one common theory—that powerful actors deliberately planned the outbreak—and 25 percent of respondents said they thought it was “definitely” or “probably” true.

How did COVID-19 conspiracy theories spread so far and wide, and why do so many people believe them? In a new paper, Cynthia Wang, a clinical professor of management and organizations at the Kellogg School, attempts to answer those complicated questions. It’s not her first foray into this territory; Wang has spent years studying conspiracy theories and why some people believe them more than others.

Her latest study is a review of existing research on COVID-19 conspiracy theories and conspiratorial thinking in general. Using this body of work, Wang and her coauthors—Benjamin J. Dow of Washington University in St. Louis, Amber L. Johnson of the University of Maryland, Jennifer Whitson of UCLA, and Tanya Menon of Ohio State University—propose a framework for how COVID-19 conspiracy theories propagated and evaluate methods for preventing their spread.

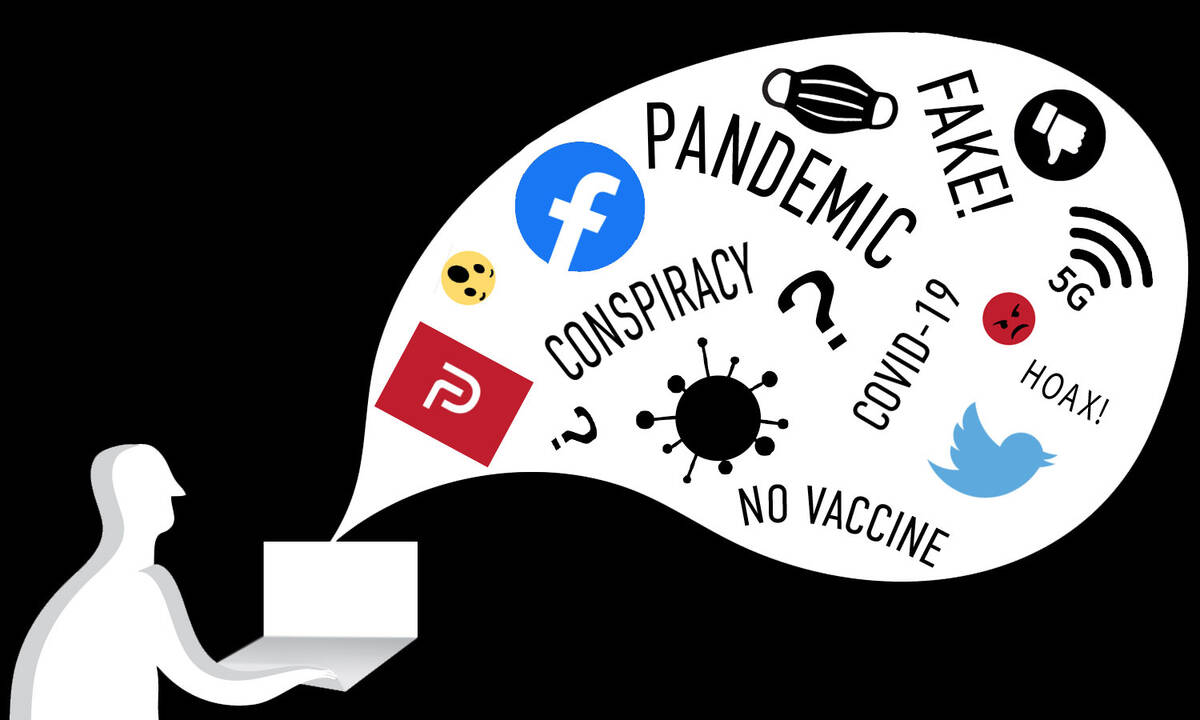

Anyone who’s logged on to Facebook recently won’t be surprised to learn that the researchers believe social media played an essential role in the rise of COVID-19 conspiracies. They argue quarantine-induced social disconnection drove people online, where platforms such as Facebook, Twitter, and YouTube served them a steady diet of misinformation. This resulted in the creation of conspiracy-oriented digital communities that gradually replaced real-life relationships.

It didn’t have to be this way. The word “conspire,” the researchers note at the end of the paper, literally means “to breathe together.” Their study, they write, “shows social media’s ability to focus collective breath in a uniquely potent manner. …The critical question is whether (and how) social media could focus our collective breath in more productive ways.”

Even before the emergence of COVID-19, American culture provided an ideal breeding ground for conspiracy theories. Off-the-charts partisanship and political division “have made it so difficult to be receptive to consuming information that comes from the other side,” Wang says, creating extremism, informational echo chambers, and an “us versus them” mentality—all factors that can make conspiracy theories easy to believe.

Then came the virus. People couldn’t see loved ones, go to work, pursue hobbies, or travel. To Wang, it wasn’t at all surprising that so many Americans turned to elaborate tales of shadowy figures to cope with the upending of their lives.

“A long line of work has shown that when people are in situations where they lack control, they’re more likely to believe in conspiracy theories,” she says. And living through the pandemic has been the ultimate loss of control.

As so much else was taken away, people went online. Global internet activity rose 25 percent in mid-March of 2020, when much of the world went into lockdown. The internet served social and entertainment needs, but also informational ones: with no watercooler chats at the office or impromptu conversations with friends, “where do we go for information? We go online,” Wang says.

The research Wang and her colleagues reviewed shows that our current social-media environment allows misinformation to survive and thrive. That, the team argues, is a major contributor to the COVID-19 conspiracy problem we see today.

Social-media algorithms deserve a share of the blame for (inadvertently) encouraging belief in conspiracy theories. When a user clicks on one conspiracy-oriented post, the algorithm offers up similar posts, often containing yet more false information. “That perpetuates the cycle,” Wang says.

False posts spread as quickly as true ones, studies show, and sometimes even faster. According to one 2018 study, the top one percent of false posts reaches as many as 100,000 users, while accurate posts rarely break 1,000. Social-media influencers can also help conspiracy theories reach large audiences; one study found that 65 percent of social-media posts containing vaccine misinformation could be traced back to just 12 influencers.

Bot accounts, some of which are operated by nation-state actors with their own agendas, add yet more fuel to the fire. “Bots have been driving public attention without public awareness,” Wang says. “And they’re the ones posting the more grandiose conspiracy theories.”

Studies find that once people fall under the spell of conspiracy theories, they are exceptionally hard to dissuade. Here, again, social media plays a key role, Wang and her coauthors point out.

Online communities centered on conspiracy theories provide constant reinforcement for outlandish beliefs in the form of clicks, likes, and shares. (In fact, there’s some evidence that in some digital circles, not sharing misinformation is socially penalized.) Such groups are also easily discoverable thanks to hashtags, Facebook groups, and simple Google searches.

“Online, we have so much opportunity to search for things to support our case. It just makes [these beliefs] unbelievably sticky and hard to change,” Wang explains.

Fueled by the internet, believers quickly develop their own language (e.g., “plandemic”) and symbols, resulting in a sense of belonging and social identity, the reviewed studies show. Often, the feeling of being united against a common enemy reinforces beliefs and prejudices people already have: discussion of the “Wuhan virus” and “Kung Flu” gave preexisting anti-Asian bias new momentum, leading to real-world violence and harassment.

Once people are under the spell of conspiracy theories, it’s difficult to challenge or debunk their views by offering accurate information. Indeed, some conspiracy believers exhibit a “backfire effect”: when given information that challenges their beliefs, they become even more convinced.

Because conspiratorial thinking is so stubborn, prevention is the best remedy, the researchers believe. But how to prevent the spread of conspiracy theories in the first place is a hotly contested issue.

One option is for social-media platforms to simply ban conspiracy-related content. Research has shown this tactic is effective at slowing the spread of conspiracy theories, but may not eliminate exposure to them altogether. Content restriction is also a blunt instrument, and one that social media companies feel understandably reluctant to implement.

Another, less drastic tactic is “pre-bunking”—essentially, trying to inoculate people against misinformation in a variety of ways: increasing science literacy, exposing people to accurate information before they see conspiracy theories, and raising awareness of the persuasive tactics used by conspiracy theorists. “Those types of interventions do seem to work better than trying to change people’s minds afterwards,” Wang says.

Fighting loneliness and social disconnection can also reduce the appeal of conspiracy theories in the first place. The best option, of course, might be to simply get offline and outside. But even when that’s not possible, the researchers note that tools like Zoom and Skype can help people maintain existing ties. The rise of affordable teletherapy options can also increase access to mental-health services, helping people combat loneliness and stress.

For anyone with a friend or loved one who believes COVID-19 conspiracy theories, Wang suggests keeping the lines of communication open. Offline conversation is best; back-and-forth debates on Facebook lack “a relational component,” Wang says, and rarely change anyone’s mind.

Try to be nonjudgmental, and don’t dismiss the believer or their ideas as stupid. “Conspiracy theories are actually quite complex, so that probably means these people have put a fair amount of thought into it,” she says. Ask calm but probing questions that encourage deeper reflection (“Can you tell me more about why you think that?”) and look for points of agreement in your worldviews where you can.

It’s also OK to admit defeat. You may reach a point where “you just have to agree to disagree,” Wang says. “Sometimes, the beliefs are so ingrained that there’s not much you can do about it.”

Wang sees reason for hope if vaccination rates increase and the virus’s spread is reined in. “People will feel more under control, and then, hopefully, a lot of the conspiracy theories will start diminishing,” Wang says. “That’s the optimistic part of me.”

Susie Allen is a freelance writer in Chicago.

Dow, Benjamin, Amber Johnson, Cynthia Wang, Jennifer Whitson, and Tanya Menon. 2021. “The COVID-19 Pandemic and the Search for Structure: Social Media and Conspiracy Theories.” Social and Personality Psychology Compass.