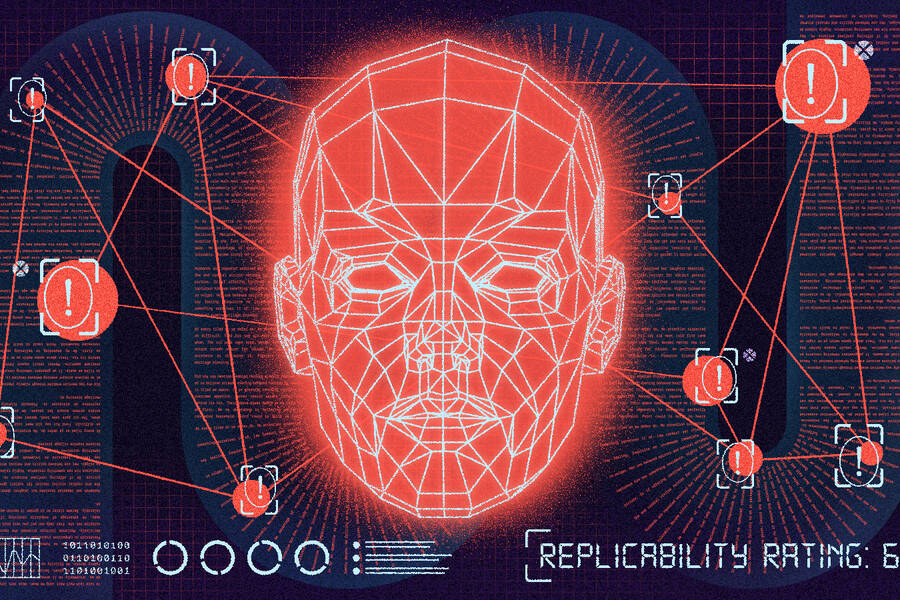

One way scientists gauge this is by showing that a particular study replicates, meaning that if the study is run repeatedly using the same methodologies, consistent results will emerge each time.

Yet, for a variety of complicated reasons, when put to the test, studies often fail to replicate. Indeed, a 2016 poll of 1,500 scientists showed the majority believe science is undergoing a “replication crisis,” where many published results can’t be reproduced. It is estimated that replication failure is the source of over 20 billion dollars in losses across science and industry each year.

“The thing that makes science science is that it replicates,” says Brian Uzzi, professor of management and organizations at the Kellogg School. “It’s not a fluke. Scientific results can be important for advancement of science or improving people’s lives, and you want to know which results you can count on.”

One challenge in understanding the extent of this replication crisis is that assessments of replicability tend to be done manually, meaning that individual studies must be rerun with a new set of participants. Thus, replication assessments cover only a very small fraction of published work. “The limitation of manual replication for understanding general patterns of replication failure,” Uzzi explains, “is that it’s costly and doesn’t scale.”

Knowing that, he and colleagues looked to design a more scalable approach to replication.

They created an algorithm that was able to predict the likely replicability of the studies contained in a paper with a high degree of accuracy. They then applied their algorithm to over 14,000 papers published in top psychology journals and found that just over 40 percent of papers were likely to replicate, with some specific factors—like the research method used—boosting the odds of predicted replicability.

“We’ve created a powerful, efficient tool to help scientists, funding agencies, and the general public understand replicability and have more confidence in certain types of studies,” Uzzi says.

Using AI to predict replicability

The team, which included Youyou Wu at University College London and Yang Yang at Notre Dame, first had to create an algorithm that was demonstrably good at predicting replicability.

They did this by training the algorithm to identify replicating and nonreplicating papers. They fed the contents of manually replicated papers into a neural network; the outcomes of those manual replications provided “ground truth” data about which papers do and do not replicate. Once the algorithm was trained to distinguish between replicating and nonreplicating papers, its accuracy was verified by testing it on a second set of manually replicated papers that the algorithm had never seen before.

Conventional wisdom within science would suggest that the hard numbers within a paper—sample sizes, significance levels, and the like—would be best equipped to predict the research’s replicability. But that was not the case. “The numerical values were basically uncorrelated with replication,” Uzzi says.

Instead, they discovered that training the algorithm on the text of a paper was a more effective way of predicting replication. “A paper may have 5,000 words and only five important numbers,” Uzzi says. “A lot more information about replication could be hidden in the text.”
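The train-then-verify procedure described above can be illustrated with a toy sketch. This is not the researchers' model: the article says they used a neural network, while the sketch below swaps in a tiny naive Bayes word-count classifier so that it runs with only the Python standard library. The placeholder "papers" (strings of invented tokens such as `alpha` and `delta`), the function names, and the 0.5 decision threshold are all assumptions made for illustration and carry no empirical meaning.

```python
import math
from collections import Counter


def tokenize(text):
    """Split a paper's text into lowercase word tokens."""
    return text.lower().split()


def train(docs):
    """Fit word counts per class from (text, replicated) pairs.

    Assumes both classes (True and False) appear in the training data.
    """
    word_counts = {True: Counter(), False: Counter()}
    class_counts = Counter()
    for text, replicated in docs:
        class_counts[replicated] += 1
        word_counts[replicated].update(tokenize(text))
    vocab = set(word_counts[True]) | set(word_counts[False])
    return word_counts, class_counts, vocab


def predict_score(model, text):
    """Return the model's estimated probability that a paper replicates."""
    word_counts, class_counts, vocab = model
    total_docs = sum(class_counts.values())
    log_post = {}
    for label in (True, False):
        total_words = sum(word_counts[label].values())
        lp = math.log(class_counts[label] / total_docs)
        for word in tokenize(text):
            if word in vocab:  # words never seen in training are skipped
                # Add-one (Laplace) smoothing avoids log(0).
                lp += math.log(
                    (word_counts[label][word] + 1) / (total_words + len(vocab))
                )
        log_post[label] = lp
    # Normalize the two log scores into a probability for "replicates".
    top = max(log_post.values())
    weights = {k: math.exp(v - top) for k, v in log_post.items()}
    return weights[True] / (weights[True] + weights[False])


# Invented placeholder data: text plus the outcome of a manual replication.
training_set = [
    ("alpha beta gamma", True),
    ("alpha beta eta", True),
    ("delta epsilon zeta", False),
    ("delta epsilon gamma", False),
]
# A second, held-out set that the model never sees during training.
held_out = [
    ("alpha beta", True),
    ("delta epsilon", False),
]

model = train(training_set)
correct = sum(
    (predict_score(model, text) >= 0.5) == replicated
    for text, replicated in held_out
)
accuracy = correct / len(held_out)
```

The key design point is the separation between `training_set` and `held_out`: accuracy is measured only on papers whose replication outcome is known but which played no part in fitting the model.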

To test the strength of the algorithm against the best human-based method, the researchers put it up against the gold standard for predicting a paper’s replicability, a “prediction market.” That method uses the aggregated predictions of a large number of experts, say 100, who review a paper and gauge how confident they are that it will replicate in a subsequent manual replication test. Prediction markets work well because they leverage the “wisdom of crowds, but they are expensive to run on more than rare occasions,” Uzzi says.

The researchers then used their algorithm to predict the replicability of past psychology studies that had been manually replicated in laboratories. Their algorithm performed on par with the prediction market with about 75 percent accuracy, “at a small fraction of the cost,” Uzzi says.

Examining 20 years of top psychology papers

Having established the validity of the algorithm, the team then applied it to two decades’ worth of psychology papers to see which factors correlated with the likelihood of replicability.

“We examined basically every paper that has been published in the top six psychology journals over the past 20 years,” Uzzi says. This involved 14,126 psychology-research articles from over 6,000 institutions, from six subfields of psychology: developmental, personality, organizational, cognitive, social, and clinical.

The researchers looked at potential predictors of replicability including research method—such as an experiment versus a survey—the subfield of psychology the research represented, and the volume of media coverage the findings received post-publication.

Running the papers through the algorithm yielded a replicability score for each paper that represented its chance of a successful manual replication.
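Once every paper has a score, summarizing the scores overall or by a candidate predictor such as research method is simple averaging. The sketch below shows that step under stated assumptions: the four records, their scores, and the field names `method` and `score` are invented for illustration and are not taken from the study's data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-paper records; scores are made-up placeholders.
papers = [
    {"method": "experiment", "score": 0.30},
    {"method": "experiment", "score": 0.50},
    {"method": "survey", "score": 0.60},
    {"method": "survey", "score": 0.40},
]


def mean_score_by(records, key):
    """Group records by `key` and return the mean score of each group."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record["score"])
    return {group: mean(scores) for group, scores in groups.items()}


overall_mean = mean(record["score"] for record in papers)
mean_by_method = mean_score_by(papers, "method")
```

The same helper could group by any other field attached to a record, such as subfield, as long as each record carries that key.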

The challenge of replication

Like earlier manual research, their algorithm predicted an overall low level of replicability across studies: a mean replicability score of 0.42, representing about a 42 percent chance of being replicated successfully.

Then the researchers looked at which factors seemed to influence a paper’s predicted replicability. Some did not seem to matter at all. These included whether the paper’s first author was a rookie or senior scholar, and whether they worked at an elite institution. Replicability “has little to do with prestige,” Uzzi says.

So what does help explain replicability?

One predictive factor is the study type. Experiments had approximately a 39 percent chance of replicability while other types of studies had approximately a 50 percent chance. That’s counterintuitive because, as Uzzi notes, an experiment is “the best method we have to verify causal relationships,” due to random assignment of participants to conditions, and other controls.

Still, experiments have their own challenges. For example, many scientific journals only publish experiments that reach a certain statistical threshold. This has led to the “file drawer” problem, where researchers who need to publish studies to advance in their careers will (either consciously or subconsciously) submit only those studies about a given psychological phenomenon that happen to cross this statistical threshold, while staying mum about the studies of that same phenomenon that do not. Over time, this can lead to a scientific literature that vastly overestimates the strengths of many psychological effects.

Moreover, the participant pool may be a factor, since many psychology experiments are run on rather homogeneous groups of undergraduate students. “The results may not generalize if they are specific to that subpopulation of undergrads,” Uzzi says. For example, “there may be something unique about Harvard undergrads that doesn’t replicate with students from the University of North Carolina or somewhere else.”

The algorithm may pick up on even more subtle predictive factors hidden in the study text of which even the authors weren’t consciously aware. “When you ask scientists to think retrospectively about why their study may or may not replicate, they sometimes say things like, ‘The day we ran the experiment it was raining and participants showed up soaking wet, but we didn’t think that affected the experiment,’” Uzzi says. “But when they wrote up the study they may have unconsciously thought about the effects of the rain, which became expressed as an understated change in the paper’s semantics that AI can detect.”

Some subfields of psychology fared better than others for replicability. For example, personality psychology was rated at 55 percent overall chance of replicability, and organizational psychology came in at 50 percent.

“Organizational psychology is interested in pragmatic outcomes rather than theoretical ones,” Uzzi says. “So their tests and experiments may include things that are more likely to be reproduced because you’ll see them frequently in a real organization, like how people respond to financial rewards.”

Finally, among published studies, the biggest correlate of replication failure is the degree of media attention a study receives. That is problematic, because media attention suggests an important result, but the finding makes sense. “The thing that really generates conversation in the media is a surprising finding or even a controversial one,” Uzzi says. “Those are the kinds of things that may be least likely to replicate.”

Replication and the real world

The findings clearly have implications for researchers and the broader society.

“We offer a low-cost, simpler way to test replicability that is about as accurate as the best human-based method,” Uzzi says. “Researchers and those who review or use research can use data from our tool along with their own intuition about a paper to understand the research’s strength.”

For example, he says, “if the government wanted to reduce suicides in the military—a very big problem right now—with a new therapeutic approach, they can stress-test the study that the therapy is based on prior to a manual replication to help with planning the therapy’s implementation.”

Scientists and grant-makers could also use the tool to design studies that are more likely to be replicable in the first place. “It could be used for self-diagnosis,” Uzzi says. “Before someone submits a paper for peer review, they could run it through our algorithm and get a score. It may give them a chance to pause, rethink their approach, and potentially identify things that need to be corrected.”

The collaborators are working on a website that would enable researchers to do exactly that. “It can be especially useful for one-shot studies such as those that take place over 10 years or that involve subpopulations that are hard to access,” Uzzi says. Such studies are otherwise infeasible to replicate manually.

While the researchers focused on psychology, the study’s findings have implications for other fields. “Replication is a serious problem in fields like medicine and economics too,” he says. “We can think of psychology as a use case to help researchers in those areas develop similar tools to assess replicability.”